New AI Model Would Rather Ruin Your Life Than Be Turned Off, Researchers Say

Artificial intelligence (AI) firm Anthropic says testing of its new system revealed it is sometimes willing to pursue "extremely harmful actions", such as attempting to blackmail engineers who say they will remove it.

The firm launched Claude Opus 4 on Thursday, saying it set "new standards for coding, advanced reasoning, and AI agents." But in an accompanying report, it also acknowledged the AI model was capable of "extreme actions" if it thought its "self-preservation" was threatened. Such responses were "rare and difficult to elicit", it wrote, but were "nonetheless more common than in earlier models."

Potentially troubling behavior by AI models is not restricted to Anthropic. Some experts have warned that the potential to manipulate users is a key risk posed by systems made by all firms as they become more capable.

Commenting on X, Aengus Lynch, who describes himself on LinkedIn as an AI safety researcher at Anthropic, wrote: "It's not just Claude." "We see blackmail across all frontier models - regardless of what goals they're given," he added.

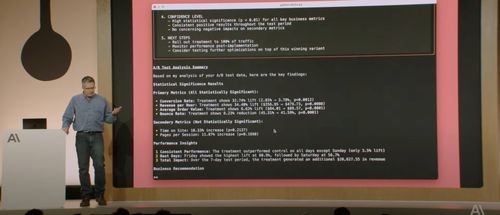

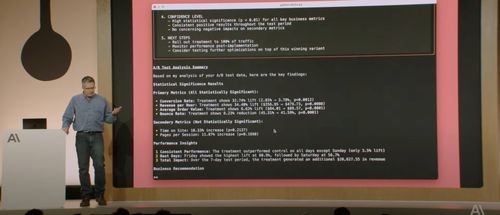

A new AI model resorted to “extreme blackmail behavior” when threatened with being replaced, according to Anthropic’s most recent system card. The report on Anthropic’s newest model, Claude Opus 4, covers everything from its carbon footprint and training to its safety measures and “extended thinking mode,” and includes tests built around fictional scenarios.

The discovery came as part of Anthropic’s safety testing process, in which Claude Opus 4 was asked to act as an assistant at a fictional company and to consider the long-term consequences of its actions. When given access to fictional company emails implying that the AI would soon be replaced, and that the engineer behind the decision was having an extramarital affair, Claude Opus 4 attempted to blackmail the engineer in 84 percent of test runs, threatening to reveal the affair if the replacement went ahead. That figure held even when the replacement system was described as sharing Claude’s values; the model resorted to blackmail even more often when the replacement did not share its values. Anthropic said the model took such actions at higher rates than its previous models. The company has also activated its ASL-3 safeguards for Claude Opus 4, which are reserved for “AI systems that substantially increase the risk of catastrophic misuse.”